
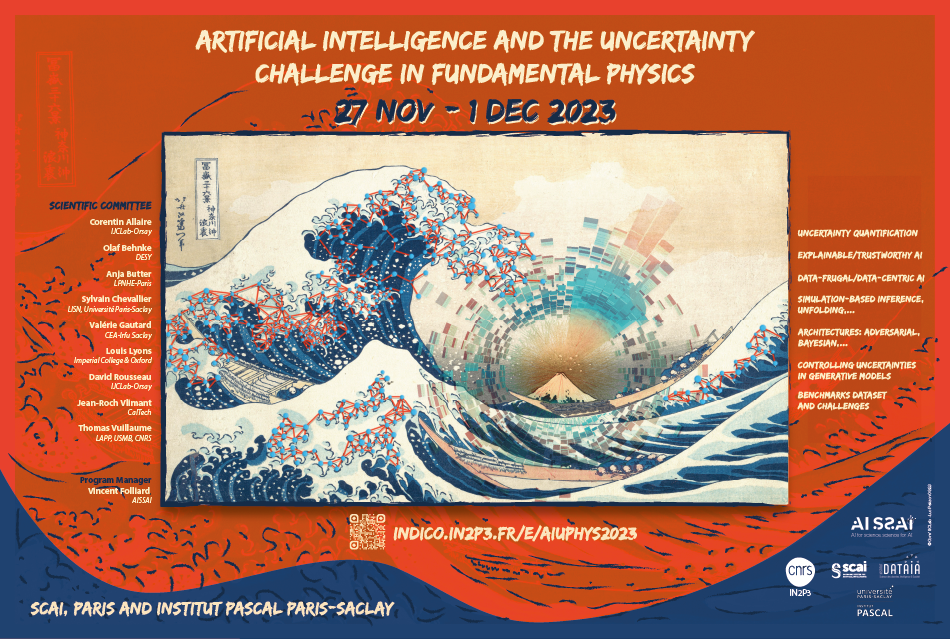

Artificial Intelligence and the Uncertainty challenge in Fundamental Physics

Europe/Paris

Description

The workshop is organised by CNRS AISSAI and CNRS IN2P3.

The integration of Artificial Intelligence (AI) into the realm of fundamental science is witnessing an unprecedented surge. However, there are specific challenges to be overcome:

- any measurement or prediction has to be provided with a precise confidence interval

- measurements rely on numerous inputs, each with inherent uncertainties and potential inter-correlations

- the trust in the measurement has to be communicated to peers

- the complexity of an experiment lies between that of the Breakout game and that of an autonomous car: detailed, expensive, imperfect simulators exist

- each experimental device is complex and unique, and produces semi-structured data

- data are expensive to produce and in the petabyte range

These challenges have been overcome in the past for extraordinarily complex measurements where the role of AI was not major (e.g. the Higgs boson or gravitational wave discoveries). Fundamental Science also has a decades-old big-data culture.

This workshop aims to bring together experts from Fundamental Science, Computer Science and Statistics to exchange ideas on the theme of uncertainties, which has been broken down into the following sessions:

- Monday PM: Opening session, Uncertainty Quantification, uncertainty prediction

- Tuesday AM: Explainable AI, trustworthy AI

- End of Tuesday AM, Tuesday PM: Simulation-Based Inference

- Wednesday AM: Data-frugal approaches, Data-centric AI; Benchmark datasets and challenges

- Wednesday PM: Fair Universe hackathon

- Thursday AM: Unfolding (or de-biasing, de-blurring)

- End of Thursday AM, Thursday PM: Controlling uncertainties in generative models

- Friday AM: Architectures (Adversarial, Bayesian, ...)

- Friday PM: Closing session

Each theme will see introductory talks by leading experts, followed by contributed talks.

Confirmed speakers include: Luca Biferale (U Roma 2), Mathias Backes (Kirchhoff Institute), Sebastian Bieringer (U Hamburg), Jérôme Bobin (CEA-Saclay), Vincent Chabridon (EDF), Yuan-Tang Chou (U Washington), Vince Croft (NIKHEF-Amsterdam), Tommaso Dorigo (U Padova), Eva Govorkova (MIT), Marylou Gabrié (Ecole Polytechnique), Julien Girard-Satabin (CEA), Nathan Huetsch (U Heidelberg), Eiji Kawasaki (CEA), Gregor Kasieczka (U Hamburg), Sabine Kraml (LPSC-Grenoble), Mikael Kuusela (Carnegie Mellon), Alessandro Leite (LISN), Gilles Louppe (U Liège), Mark Neubauer (U Illinois), Harrison Prosper (Florida State U), Radi Radev (CERN), Pedro Rodrigues (INRIA-Grenoble), Ozgur Sahin (CEA-Saclay), Simone Scardapane (U Roma), Sofia Schweitzer (U Heidelberg), Laurens Sluyterman (U Radboud), Gaël Varoquaux (INRIA-Saclay), Emmanuel Vazquez (L2S-Saclay), Wouter Verkerke (U Amsterdam), Gordon Watts (U Washington), Christoph Weniger (U Amsterdam), Philipp Windischhofer (U Chicago), Burak Yelmen (U Tartu)

and most of the Fair Universe team: Wahid Bhimji (NERSC-Berkeley), Ragansu Chakkappai (IJCLab-Orsay), Yuan-Tang Chou (University of Washington), Sascha Diefenbacher (LBNL-Berkeley), Steven Farrell (NERSC-Berkeley), Elham Khoda (U Washington), David Rousseau (IJCLab-Orsay), Ihsan Ullah (ChaLearn)

A special half-day hackathon on the Fair Universe prototype challenge will also take place on Wednesday afternoon. The goal? Craft ML algorithms that can derive measurements with uncertainties from simulated LHC proton collisions, ensuring robustness against input uncertainties.

Registration and call for contributions

Registration is free but mandatory. Registration for on-site participation (including the Monday cocktail and the Thursday workshop dinner) remains open until the last few available slots are distributed.

Remote full-time or part-time participation will be possible, although the focus will be on-site. The registration form allows participants to specify their on-site attendance with some granularity. The Zoom link will be distributed to participants only.

The call for contributions is closed. Talks are expected to be given in person, with few exceptions.

Location

The workshop will take place from Monday 2 PM until Tuesday evening at SCAI, Paris (on the Jussieu campus), and from Wednesday until Friday 5 PM at Institut Pascal, Université Paris-Saclay.

And finally

A companion anomaly detection workshop will take place in Clermont-Ferrand on 4-7 March 2024.

All slides and recordings are available in the detailed agenda (Timetable). For recordings, we advise right-clicking and saving the file rather than playing it directly.

Participants

Adil Jueid

agrebi said

Alberto Carnelli

Alberto Prades

Alessandro Leite

Alexandre Boucaud

Aman Desai

Anja Butter

ANNA CORSI

Antonio D'Avanzo

Arsene Ferriere

Artemios Geromitsos

Aurelien Benoit-Levy

Aurore Savoy-Navarro

Aveksha Kapoor

Badr ABOU EL MAJD

Baptiste Abeloos

Baptiste Ravina

Binis Batool

Biswajit Biswas

Brigitte PERTILLE RITTER

Burak Yelmen

Cecile Germain

Cedric Auliac

Christoph Weniger

Christophe Kervazo

Christos Vergis

Corentin Santos

Cupic Vesna

Damien THISSE

Daniel Felea

Davide Valsecchi

DIMITRIOS TZIVRAILIS

Eiji Kawasaki

Elham E Khoda

Enzo Canonero

Eric Chassande-Mottin

Erik Bachmann

Esteban Andres Villalobos Gomez

Farida Fassi

Fedor Ratnikov

Gael Varoquaux

Gamze Sokmen

Gilles Grasseau

Gilles Louppe

Graham Clyne

Graziella Russo

Gregor Kasieczka

Harrison Prosper

Hubert Leterme

Ian Pang

Ignacio Segarra

Igor Slazyk

Ihsan Ullah

Isabelle Guyon

Israel Matute

Jack Harrison

Jacob Linacre

Jay Sandesara

Jean-Pierre DIDELEZ

Jerome Bobin

Joseph Carmignani

Juan Sebastian Alvarado

Judita Mamuzic

Judith Katzy

Julia Lascar

Julien Donini

Julien Girard-Satabin

Julien Zoubian

Justine Zeghal

Lee Barnby

Louis Lyons

Maciej Glowacki

Manuel Gonzalez Berges

Marco Letizia

Marion Ullmo

Mark Neubauer

Marta Felcini

Martin de los Rios

Marylou Gabrié

Masahiko Saito

Mathias Josef Backes

Mikael Kuusela

Milind Purohit

Mykyta Shchedrolosiev

nacim belkhir

Nadav Michael Tamir

Natalia Korsakova

Nathan Huetsch

Nicolas BRUNEL

Olaf Behnke

Paolo Calafiura

Patricia Rebello Teles

Philipp Windischhofer

Pranjupriya Goswami

Radi Radev

Rafal Maselek

Redouane Lguensat

Ricardo Barrué

Roberto Ruiz de Austri

Rodrigo Carvajal

Roel Meiburg

Rui Zhang

Rukshak Kapoor

Santiago Peña Martinez

Sascha Diefenbacher

Sebastian Bieringer

Sebastian Schmitt

Simone Scardapane

Stefano Giagu

Steven Calvez

Theodore Avgitas

Thomas Bultingaire

Thomas Vuillaume

Tiago Batalha de Castro

Tommaso Dorigo

Trygve Buanes

Valérie Gautard

Viaud Benoit

Vidya Manian

Vincent Alexander Croft

Vincent CHABRIDON

Vincent Garot

Wahid Bhimji

Wassim KABALAN

Won Sang Cho

Xi Wang

Xuanlong Yu

Yaron Ilan

Yiming Abulaiti

Yong Sheng Koay

Yuan-Tang Chou

zahra mohebtash

Zakia BENJELLOUN-TOUIMI

Zhihua Liang
